Three-dimensional Human-computer Interaction Model Design for Chinese Pronunciation

Project Background

Since the global outbreak of COVID-19, artificial intelligence in education has been gaining momentum, and online teaching is increasingly accepted as a way of learning. This new mode of teaching also challenges the traditional classroom model. In traditional classroom pronunciation teaching, teachers mainly demonstrate articulation through verbal description, teaching aids, or gestures, and students are left to work out the rest on their own. This approach is highly ambiguous and struggles to meet the needs of online teaching and of feedback in computer-assisted pronunciation teaching.

Without teaching aids or a teacher's face-to-face demonstration and guidance, how to help learners quickly grasp the essentials of phonetics and imitate sounds effectively has become a key question for phonetics teachers in this new situation.

In recent years, computer-assisted pronunciation teaching (CAPT) technology has been applied on online pronunciation teaching platforms, with various attempts to give learners better feedback on their pronunciation problems. Goodness of Pronunciation (GOP) scores can, to some extent, help learners recognize the strengths and weaknesses of their pronunciation. Feedback expressed in terms of pronunciation attributes goes further, showing learners more clearly where their problems lie and how to correct them. However, without a teacher's on-site guidance, feedback given as textual descriptions or in overly technical terms makes it hard for learners to notice changes in the key articulators; some cannot even tell which part of the oral cavity a term refers to, so they struggle to produce the correct sound.
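GOP scoring, mentioned above, can be illustrated with a short sketch. In its classic form, GOP compares how well the expected phone explains an aligned speech segment against the best-matching phone, normalized by the segment's duration. The version below is a simplified frame-posterior variant of the kind often used with neural acoustic models; it assumes an acoustic model and forced aligner (not shown) have already produced per-frame phone posteriors, and the function name and input format are hypothetical rather than taken from any particular platform.

```python
import math
from typing import Dict, Sequence


def gop_score(frame_posteriors: Sequence[Dict[str, float]], canonical: str,
              eps: float = 1e-10) -> float:
    """Duration-normalized GOP for one aligned phone segment (illustrative).

    frame_posteriors: one dict per frame, mapping phone label -> P(phone | frame).
    canonical: the phone the learner was expected to produce.
    Returns a value <= 0; 0 means the canonical phone was the most likely
    phone in every frame, and more negative values suggest a worse match.
    """
    if not frame_posteriors:
        raise ValueError("segment has no frames")
    total = 0.0
    for post in frame_posteriors:
        # eps floors the probabilities so log() never sees zero
        p_canonical = max(post.get(canonical, 0.0), eps)
        p_best = max(max(post.values()), eps)
        # log ratio of the expected phone against the best-scoring phone
        total += math.log(p_canonical) - math.log(p_best)
    # dividing by the frame count normalizes for segment duration
    return total / len(frame_posteriors)
```

For example, if the expected initial `zh` has posteriors 0.7 and then 0.4 over two frames while the competing `z` has 0.2 and then 0.5, the score is (0 + log(0.4 / 0.5)) / 2, roughly -0.11. A platform would compare such scores against thresholds it tunes itself.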

In articulatory phonetics research, some scholars have used electromagnetic articulography (EMA) to track the movement trajectories of the lips and tongue and obtain lip and tongue dynamics for each phoneme. From the standpoint of pronunciation feedback, this approach shows the oral changes of a target phoneme fairly intuitively. However, collecting and analyzing such data is costly and difficult. Sensors must be attached to specific points on the speaker's articulators, which causes considerable discomfort and affects the naturalness of speech; sensors can fall off, causing failed or inaccurate recordings; and the number of sensors is limited, so modeling may suffer from data sparsity. The resulting articulatory data are also hard to analyze and do not easily meet the flexible needs of pronunciation teaching.

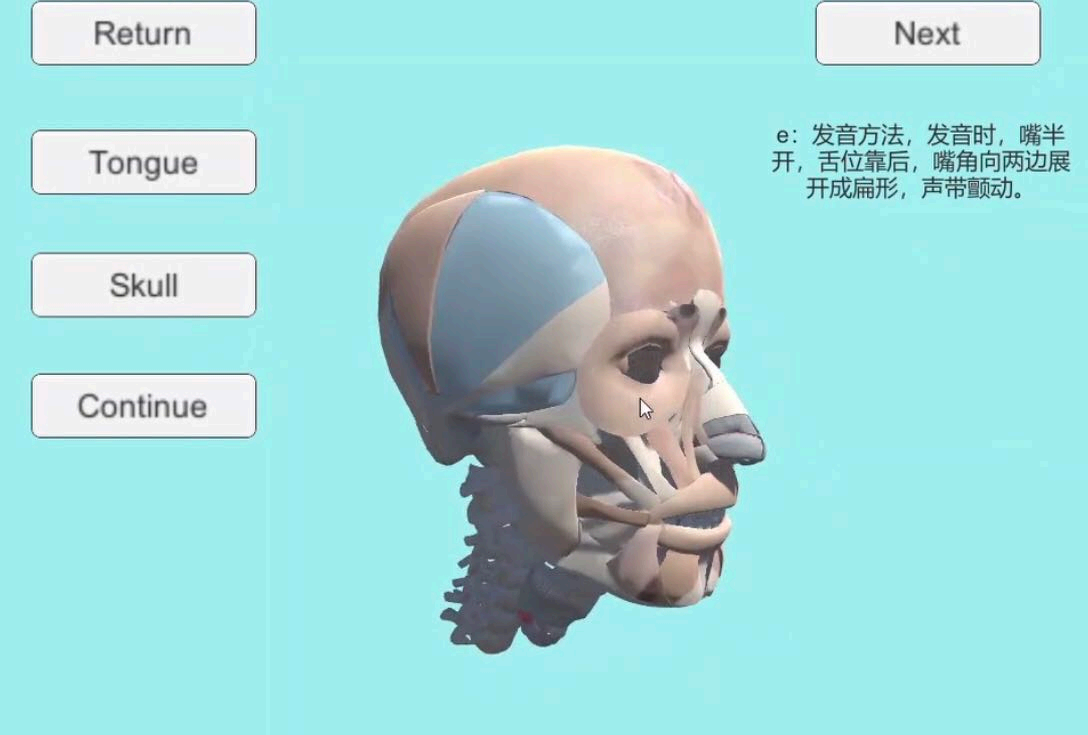

This project builds on a three-dimensional anatomical model of the human head, with animations created and interactions designed in Unity. Because the articulators inside the mouth are partly or completely hidden from view, we approach the problem from the perspective of articulatory visualization: we construct a visualized 3D virtual human head and oral system that generates synchronized speech animation, to make phonetics learning as effective as possible.

Practical Significance

Because the articulators in the oral cavity are partly or completely hidden, a three-dimensional virtual human head and oral system capable of producing synchronized speech animation is constructed, so that the articulation of Chinese phonetic symbols can be represented intuitively with a 3D animated model. Building on a 3D head model, the project draws on anatomy and articulatory visualization to create anatomically based simulated speech animation of the human oral cavity.

- Supporting classroom teaching of Chinese for international students in China. The 3D virtual head and oral system, visualized through speech animation, can help teachers in class and in after-class assessment and feedback, and can support students' online learning. By watching how sounds are produced in the simulated animation, Chinese learners can master correct articulation.

- Supporting the teaching of Chinese pronunciation to children, whom vivid 3D animation helps to understand and master Chinese pronunciation. More broadly, 3D visualization of pronunciation can benefit language teaching and even human-computer interaction, with significant theoretical value and promising potential applications.

- People with hearing impairments often cannot produce speech sounds because they cannot hear them and do not know how to articulate them. 3D pronunciation animation helps address this by letting them see how the vocal organs produce each sound.

- The language problems of children with intellectual disabilities are becoming increasingly prominent. This system can guide children with hearing impairments in training tongue placement for pronunciation, overcoming the invisibility of tongue movements in their current language learning and improving the effectiveness of their pronunciation training.

- Research on visualizing Chinese pronunciation, especially with three-dimensional models, is currently scarce. A visualized 3D virtual human head and oral system that generates synchronized speech animation is therefore valuable for language learning, particularly second-language learning and speech correction. The system could also be extended to pronunciation teaching for English phonetics, the Japanese fifty-sound chart, and more.

Project Content

Overall Goal: To construct a user-friendly, three-dimensional virtual human head and oral system that can generate synchronized voice animations.

Animation

- Design three-dimensional animations of each vocal organ's movements, based on the muscle movements involved in human articulation.

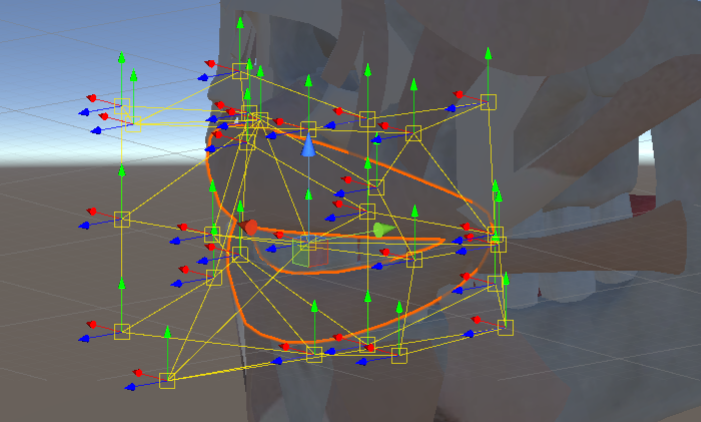

- Treat vocal organs such as the teeth, hard palate, and lower jaw, which deform little or not at all during pronunciation, as rigid bodies and simulate their movements. For vocal organs such as the tongue and soft palate, which deform substantially, attempt to simulate the deformation itself, using Unity's tools to model the movement of soft, highly deformable muscles such as the tongue.

- For data, we are exploring driving the three-dimensional physiological model with electromagnetic articulography (EMA) data recorded from Chinese speech, to visualize the Chinese pronunciation process.
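The rigid-body treatment of the lower jaw can be combined with EMA data roughly as follows. This is a minimal 2D sketch in the midsagittal plane under several assumptions not stated in the project: a single EMA sensor on the lower incisors tracks the jaw, the jaw rotates about a fixed hinge point approximating the temporomandibular joint (the real jaw also translates), and the helper names `resample`, `jaw_angle`, and `rotate_about` are hypothetical. In Unity, the per-frame rotation step would correspond roughly to `Transform.RotateAround` on the jaw object.

```python
import bisect
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]  # (x, y) in the midsagittal plane, e.g. in mm


def resample(times: Sequence[float], values: Sequence[Point], t: float) -> Point:
    """Linearly interpolate a sensor trajectory at time t (seconds).

    EMA is typically sampled faster than the animation frame rate, so each
    rendered frame reads the trajectory at its own timestamp.
    """
    if t <= times[0]:
        return values[0]
    if t >= times[-1]:
        return values[-1]
    i = bisect.bisect_right(times, t)  # times[i - 1] <= t < times[i]
    w = (t - times[i - 1]) / (times[i] - times[i - 1])
    (x0, y0), (x1, y1) = values[i - 1], values[i]
    return (x0 + w * (x1 - x0), y0 + w * (y1 - y0))


def jaw_angle(hinge: Point, rest: Point, current: Point) -> float:
    """Signed angle (radians) the jaw sensor has rotated about the hinge since rest."""
    ax, ay = rest[0] - hinge[0], rest[1] - hinge[1]
    bx, by = current[0] - hinge[0], current[1] - hinge[1]
    # atan2(cross, dot) gives the signed angle from vector a to vector b
    return math.atan2(ax * by - ay * bx, ax * bx + ay * by)


def rotate_about(p: Point, hinge: Point, angle: float) -> Point:
    """Rigidly rotate point p about the hinge; the jaw mesh keeps its shape."""
    c, s = math.cos(angle), math.sin(angle)
    dx, dy = p[0] - hinge[0], p[1] - hinge[1]
    return (hinge[0] + c * dx - s * dy, hinge[1] + s * dx + c * dy)
```

Per frame, one would call `resample` at the frame's timestamp, convert the sensor position to an angle with `jaw_angle`, and apply that single rotation to every jaw and lower-teeth vertex (or to the jaw's transform). Using one rotation for the whole jaw is what keeps it rigid.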

Interaction design

- Establish a basic interaction framework, implementing human-computer interaction through scripts: moving and rotating the view of the head, making selected muscles semi-transparent, and other interactions.

- Optimize the speech animation and the interaction flow. Because the articulators inside the mouth are partly or completely hidden, the model's interaction design is refined from the perspective of articulatory visualization so that users can observe the movement of the articulators more clearly. Optimizations include reserving interfaces, reducing the number of control points, and adding sections that visually compare Chinese and English pronunciation.
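Moving and rotating the view of the head is commonly implemented as an orbit camera. The sketch below assumes a camera that circles a target point at the centre of the head model, with yaw and pitch driven by mouse or touch drags and pitch clamped so the view never flips over the top of the head. The class name, default distances, and sensitivity are hypothetical; in Unity the same logic would live in a C# script that sets the camera's position each frame and aims it at the target (for example with `Transform.LookAt`).

```python
import math
from dataclasses import dataclass
from typing import Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class OrbitCamera:
    target: Vec3 = (0.0, 0.0, 0.0)  # hypothetical centre of the head model
    distance: float = 0.5           # distance from the target (scene units)
    yaw: float = 0.0                # degrees around the vertical (y) axis
    pitch: float = 0.0              # degrees above (+) or below (-) the horizon
    min_pitch: float = -80.0        # clamp keeps the view from flipping
    max_pitch: float = 80.0

    def drag(self, dx: float, dy: float, sensitivity: float = 0.2) -> None:
        """Rotate the view from a drag of (dx, dy) pixels.

        Assumes screen y grows downward, so dragging up (dy < 0) raises the camera.
        """
        self.yaw = (self.yaw + dx * sensitivity) % 360.0
        self.pitch = max(self.min_pitch,
                         min(self.max_pitch, self.pitch - dy * sensitivity))

    def zoom(self, amount: float, min_d: float = 0.1, max_d: float = 2.0) -> None:
        """Move toward (amount > 0) or away from the head, within limits."""
        self.distance = max(min_d, min(max_d, self.distance - amount))

    def position(self) -> Vec3:
        """Camera position on a sphere around the target (y-up coordinates).

        At yaw = pitch = 0 the camera sits on the -z side of the target;
        the caller should then aim it at the target.
        """
        yaw, pitch = math.radians(self.yaw), math.radians(self.pitch)
        x = self.distance * math.cos(pitch) * math.sin(yaw)
        y = self.distance * math.sin(pitch)
        z = -self.distance * math.cos(pitch) * math.cos(yaw)
        tx, ty, tz = self.target
        return (tx + x, ty + y, tz + z)
```

Keeping the view state as yaw, pitch, and distance (rather than a free camera) means a learner can never lose sight of the head, which suits a teaching tool.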

Innovation Description

- Drawing on anatomical knowledge, we have developed a pronunciation feedback system that uses a professional anatomical human head model to simulate and teach Chinese pronunciation. The pronunciation animation is built on this physiologically based 3D model and simulates the movement of the articulators in the oral cavity. Articulators such as the teeth, hard palate, and lower jaw, which change shape little or not at all during pronunciation, are treated as rigid bodies and animated through transformations such as rotation and scaling. Articulators such as the tongue and soft palate, which deform substantially, are animated with Free-Form Deformation (FFD) to simulate their changes in shape.

- Innovation in application scenarios. Almost no Chinese teaching software on the market is built on a three-dimensional anatomical head model; most use two-dimensional teaching animations. Neither 2D animation nor demonstrations by real speakers can show the changes in the tongue, the most important articulator in the oral cavity. By incorporating anatomical knowledge, we have created a user-friendly, interactive Chinese pronunciation simulation system aimed at precise simulation. To our knowledge, this has not been done before; so far, we have implemented basic articulation animations and simple interactions in Unity.

- In short, this project brings anatomical three-dimensional animation into Chinese language teaching, which is where its innovation in application scenarios lies.
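FFD, used above for the tongue and soft palate, can be sketched with the classic trivariate Bernstein formulation of Sederberg and Parry: the mesh is embedded in a lattice of control points, and moving a control point smoothly drags nearby vertices with it. The project does not say which FFD variant it uses, so this illustrates only the general technique; the lattice size, the box, and the control-point displacement in the usage are hypothetical, and a Unity version would apply the same function to each tongue-mesh vertex and write the results back to the mesh.

```python
import math
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]
Lattice = List[List[List[Vec3]]]


def bernstein(n: int, i: int, t: float) -> float:
    """Bernstein basis polynomial B_i^n(t)."""
    return math.comb(n, i) * t ** i * (1 - t) ** (n - i)


def rest_lattice(origin: Vec3, size: Vec3, dims: Tuple[int, int, int]) -> Lattice:
    """Undeformed (l+1) x (m+1) x (n+1) lattice evenly spanning the box."""
    l, m, n = dims
    return [[[(origin[0] + size[0] * i / l,
               origin[1] + size[1] * j / m,
               origin[2] + size[2] * k / n)
              for k in range(n + 1)] for j in range(m + 1)] for i in range(l + 1)]


def ffd(point: Vec3, origin: Vec3, size: Vec3,
        lattice: Sequence[Sequence[Sequence[Vec3]]]) -> Vec3:
    """Map a point through the (possibly displaced) lattice:
    X(s, t, u) = sum_ijk B_i^l(s) B_j^m(t) B_k^n(u) P_ijk.
    """
    # local coordinates (s, t, u) in [0, 1]^3 inside the lattice box
    s, t, u = ((point[a] - origin[a]) / size[a] for a in range(3))
    l, m, n = len(lattice) - 1, len(lattice[0]) - 1, len(lattice[0][0]) - 1
    out = [0.0, 0.0, 0.0]
    for i in range(l + 1):
        bi = bernstein(l, i, s)
        for j in range(m + 1):
            bj = bernstein(m, j, t)
            for k in range(n + 1):
                w = bi * bj * bernstein(n, k, u)
                p = lattice[i][j][k]
                for a in range(3):
                    out[a] += w * p[a]
    return (out[0], out[1], out[2])
```

An undeformed lattice leaves every point where it was, because Bernstein polynomials reproduce linear functions exactly. In a 3 × 3 × 3 lattice over the unit box, raising only the top-middle control point `lattice[1][2][1]` by 0.01 moves the box centre up by 0.0625 × 0.01 = 0.000625, since that control point's weight at the centre is 0.5 × 0.25 × 0.5.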
